Using AI to build AI

Journey into Generative AI Development

I've have lived through several technology epochs in my career: pre-Internet (coding with floppy disks and tapes), Web 1.0, and Web 2.0 (cloud and mobile). With each transition, I've found hands-on learning essential to properly understand the capabilities and implications of emerging technologies. While blockchain created its own ecosystem, it didn't fundamentally change enterprise development. However, generative AI represents something different - a fundamental shift in how we design, build, govern, and manage systems.

There's significant hype around AI currently, so my approach is to "plan for the worst, hope for the best" by developing a deep understanding of these systems.

The Questions We Need to Answer

As a technology leader, I need to confidently answer questions that CIOs and executives will ask during sales processes:

- Which AI tools should engineers be using?

- Is the investment cost worth it?

- What will the benefits be beyond anecdotal claims of 15% improvement?

- How do we reconcile this with the recent DORA report suggesting things are actually as productive as they seem?

- What will the costs be as these tools move from free trials to subscription models or payasyou go?

- Are these tools only good for POCs, or can they address enterprise challenges like legacy modernisation?

- What does a development team look like in this new paradigm?

- How do we incorporate LLMs into application and business process workflows and what is the impact of having a none-deterministic system?

Learning Objectives

My experimentation had several key objectives:

- Understand what it means to use an LLM in an application

- Grasp prompt engineering and content generation

- Explore the implications of non-deterministic systems

- Determine how to govern AI within an SDLC framework

- Understand differences between models

- Learn "vibe coding" (a term that didn't even exist when I started in February)

- Identify where Gen AI fits in the development workflow

My First Project: Building an AI-Powered Git Commit Tool

For my initial project, I wanted to build a bot to generate Git commit messages using an LLM, and I decided to implement it in Rust—a language I had never used before. This approach allowed me to hit two learning objectives simultaneously.

Development Environment

I evaluated several tools:

- GitHub Copilot (at the time, it was primarily advanced IntelliSense)

- Cursor and WinSurf (paid subscriptions that abstract the cost aspect)

- Cline (open source, created by Anthropic)

- OpenRouter (providing access to multiple models through Amazon Bedrock)

I ultimately chose Cline because it showed the cost of every query, providing transparency that aligned with my learning goals.

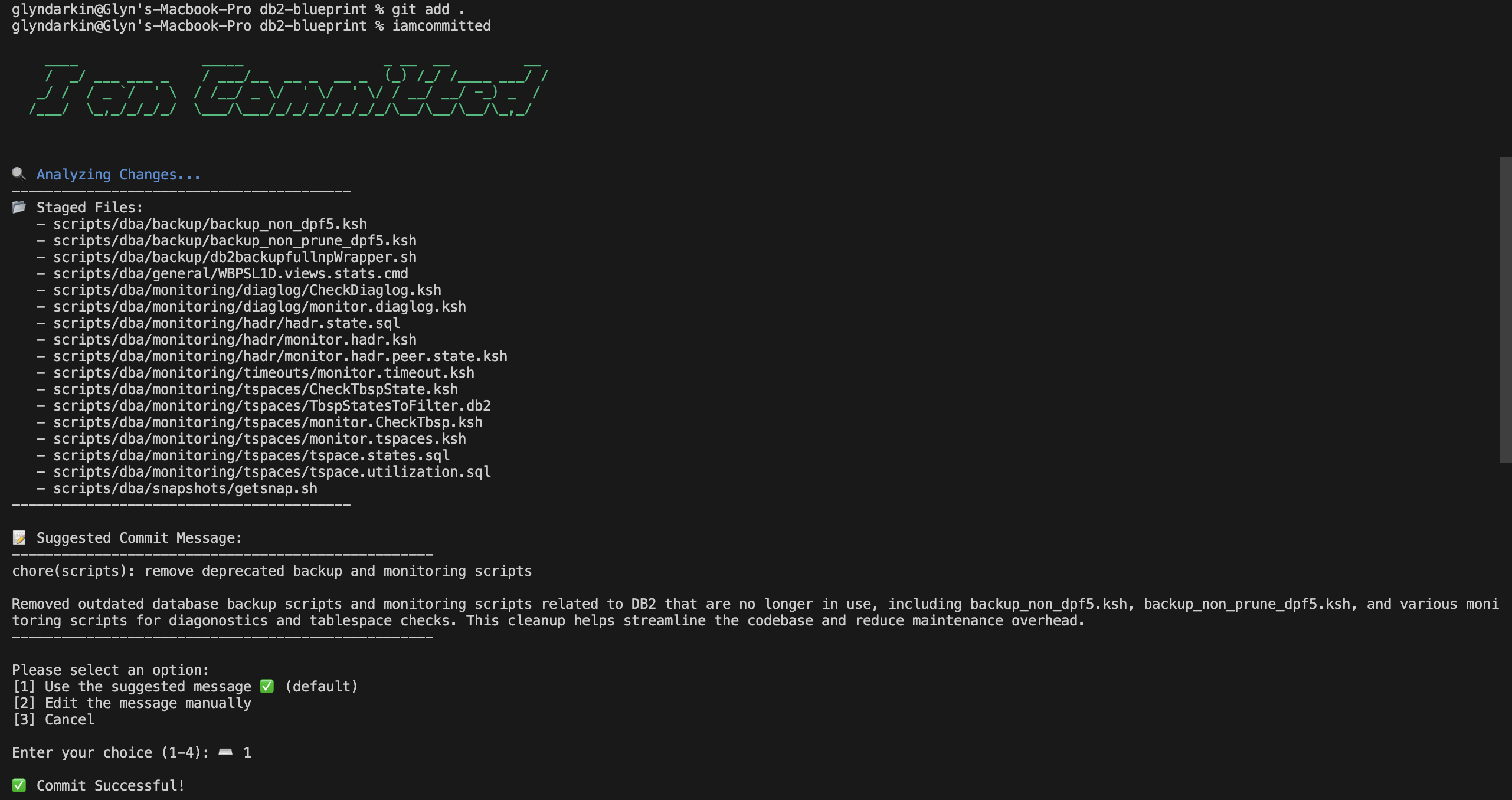

The Application: "I Am Committed"

I built a command-line application that:

- Analyses Git Diffs

- Looks at staged files

- Generates a message based on conventional commits

- Provides options to push the commit, edit the message manually, or cancel

The architecture was simple but effective: a Rust CLI backed by the OpenAI platform using GPT-4 Mini.

Surprises and Lessons

Surprise #1: It Actually Worked

My first attempt at vibe coding was surprisingly successful. Despite minimal prompt engineering and even a spelling mistake, it generated a functional Rust application. This was a profound moment that reinforced how fundamentally the world had changed.

Can you please set up my environment to be able to build a RUST based commpand line application?

Surprise #2: It Can Also Break Things

About two hours later, another simple prompt:

How can I read a line form the command line? specifically I want to give the user 3 options to select from and the user picks 1 option.

and the AI completely wiped out all my code. This highlighted the need to start working properly and setting up a proper development workflow:

- Breaking work into micro-tasks

- Saving and committing changes frequently

This also lead to me having to be more thoughtful about how I code. My traditional approach has bene to treat code more like clay, moulding it to to want I wanted it to be, but learning as I go. But the interaction with the AI requires me to be more specific, which got me thinking about development by specification. Something that is emerging as a way of owkring and something that tooling is starting to be built around. Tessl

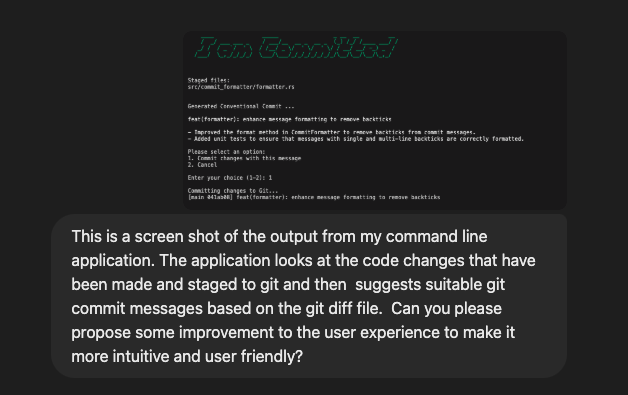

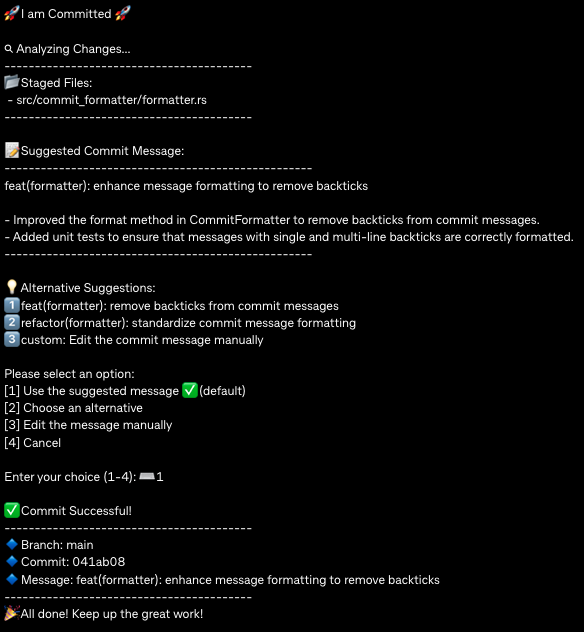

Surprise #3: UI Generation Capabilities

When I showed the AI a screenshot of my basic CLI interface and asked for something better, it generated a significantly improved interface.

Original Interface & Prompt

I implemented this by copying the generated text into a markdown file and referenced it in my next prompt. Interestingly, it stubbed out functionality that didn't exist yet without explicitly telling me, reinforcing the importance of careful code review.

Feature Development Process

As I continued development, I incrementally added features through simple one-line requests to the AI:

- Pluggable prompts stored in JSON files

- Implementing logging

- Adding unit tests

- Refactoring from a single long method into properly structured classes

- Editable response capabilities

- Displaying changed files

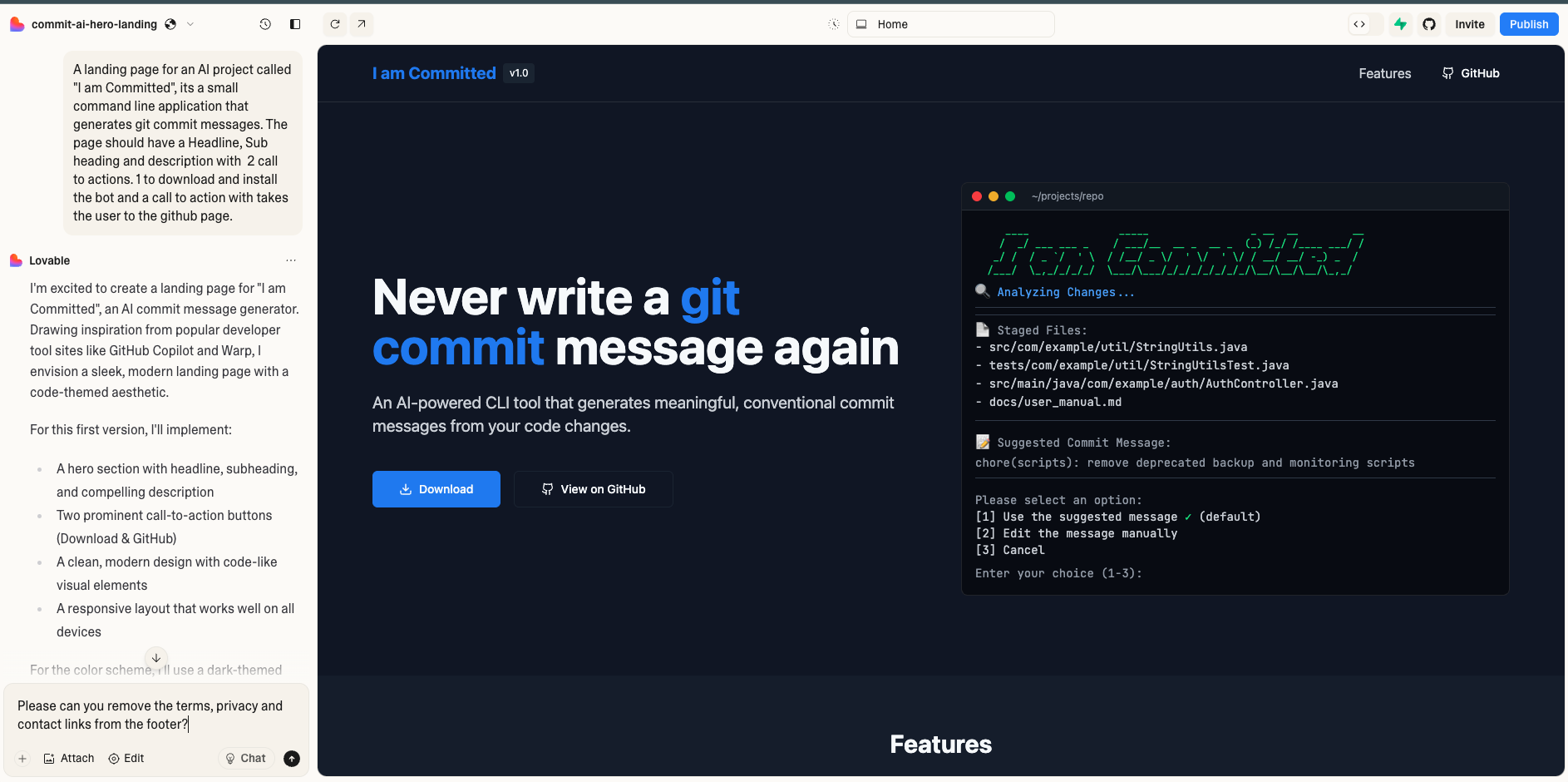

Landing page

For my landing page, I used lovable.dev (created by the team behind Figma). By providing a screenshot, it generated a complete landing page that I could sync to GitHub and further modify. This demonstrated how AI tools could compensate for missing skillsets in a team—many of our projects lack dedicated front end engineers and designers, but these tools can generate a solid first iteration.

https://commit-ai-hero-landing.lovable.app/

Insights and Thoughts

Cost Considerations

My most expensive task cost $3, which is minimal for a small application. However, this could scale quickly with larger codebases. Understanding token costs and determining when to switch to on-premise solutions (like using Llama or other open-source models) will be crucial for enterprise implementations. Subscription products like Cursor and Windsurf actually use a lot of caching and token constraints to make sure that their costs do not spiral out of control. Tools like Cline give you the real token and cost count.

Development Workflow

Working with AI requires:

- #architecture Understanding your system architecture upfront

- 100% #codereview

- Potentially different #testing modalities

- #IntentionalLearning (if you don't review the code and understand what's happening, you won't learn)

Challenges with LLM Integration

Several key challenges emerged:

- #Hallucinations are real : How do you design an enterprise system where components might "make up" answers?

- #ModelSelection: I struggled to determine which models were better than others and how to conduct proper triage.

- #CostTracking: OpenAI requests tell you tokens but not costs—how do enterprises account for and estimate these expenses?

- #Feedbackloops : Systems need built-in feedback mechanisms to improve prompt quality over time.

- API standardisation: OpenAI's SDK is becoming a protocol standard, but using abstraction layers like Open Router or Halo provides more control over costs and model switching.

I wonder if there's potential for "model arbitrage"—switching between platforms (Google's Gemini, DeepSeek, Llama) based on cost fluctuations, similar to how enterprises leverage spot/reserve instances in cloud environments.

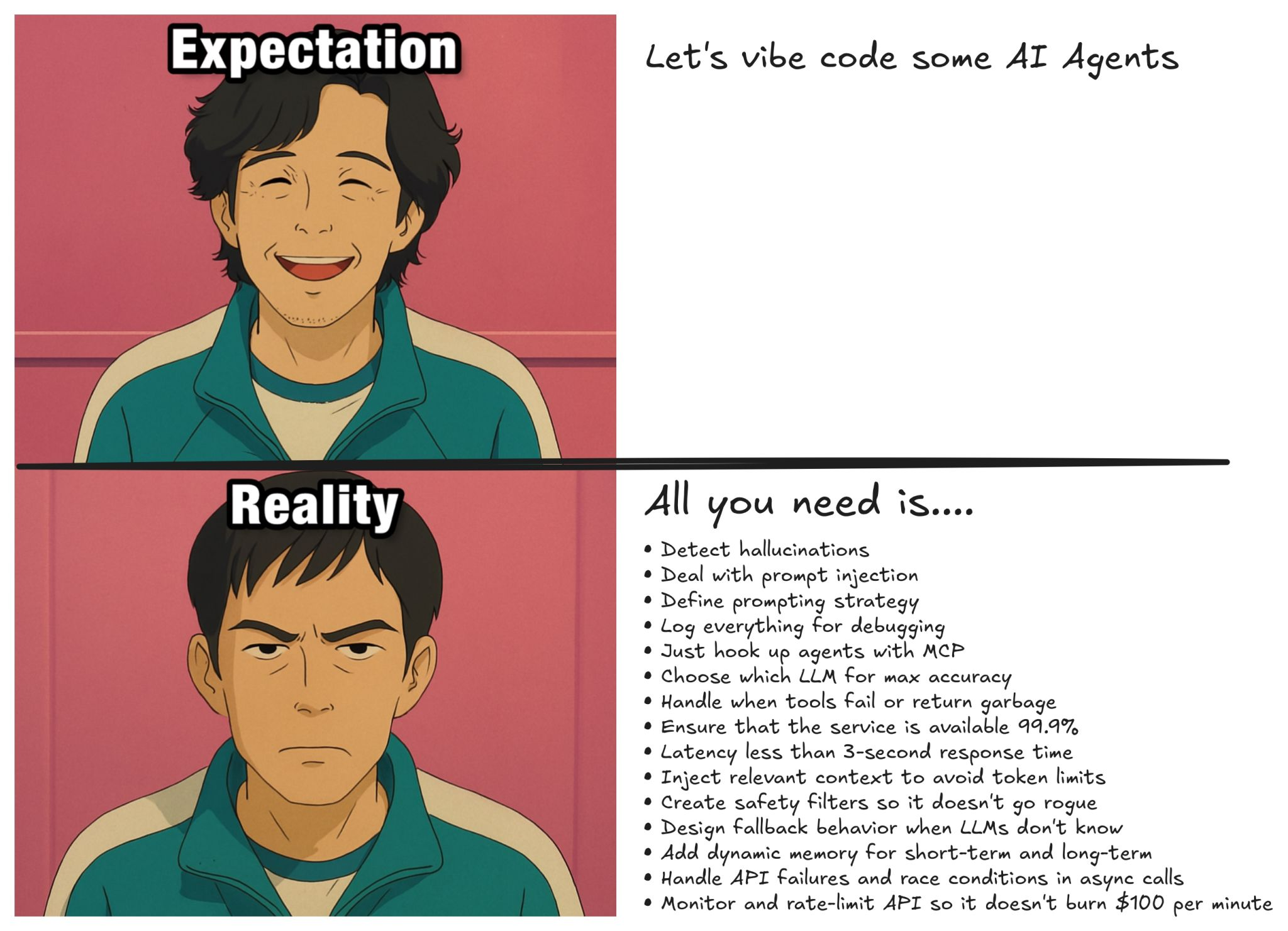

The Expanding Responsibility Space

What's fascinating is how quickly the narrative has evolved from "AI will take our jobs" to recognising all the new responsibilities these systems create:

- Hallucination detection and mitigation

- Prompt injection prevention

- Prompt strategy development

- Observability frameworks

- Machine-Callable Protocols (MCP)

- Accuracy verification

Rather than eliminating roles, these technologies are creating work that aligns perfectly with platform engineering, testing, and QA/QC disciplines.

Next Research Areas

My next areas of exploration include:

- Advanced configuration: Exploring Cline's configuration settings for more agentic development approaches

- Model evaluation: Comparing different models and determining if my simple use case needs an LLM trained on the entirety of the internet

- Caching strategies: Understanding how to optimize performance and costs

- Machine-Callable Protocols (MCP): Building "I Am Released" to generate release notes by using MCP to pull Git commit messages from a repository

Final Thoughts

Productivity and Quality Implications

Vibe coding will definitely increase productivity for basic applications—that's undeniable. However, more productivity means more applications, which likely means more security flaws and quality issues. I predict a substantial business opportunity in 2-3 years for companies to fix hastily vibe-coded applications experiencing problems in production.

Team Composition Changes

Previously, enterprise application development required specialized roles: backend engineers, frontend engineers, DevOps engineers, cloud engineers, test engineers, UX designers, content writers, and visual designers. How many of these distinct roles will still be necessary when agents can handle a large portion of the work?

As enterprises focus on cost reduction, will we see smaller teams taking products through V1, V2, and V3 before requiring full specialised teams?

Conclusion

These tools are both productivity enhancers and learning platforms, but learning must be intentional. The complexity of incorporating AI into enterprise systems is substantial but navigating these challenges will be critical to our future success.

My advice: enjoy learning, have fun, and be kind. The landscape is evolving rapidly, and maintaining an experimental mindset while sharing knowledge will be essential as we collectively figure out how to harness these powerful new capabilities.

To install I-am-Committed head on over to the Landing page. If you want to have a look at the source code or contribute to the project have a look at GItHub.

This blog post is based on a presentation given on May 20, 2025, documenting my learning journey in building an AI-powered Git commit message generator.

Research Links

- https://prompts.chat/

- https://github.com/Nutlope/aicommits/blob/develop/src/utils/prompt.ts

- https://github.com/PickleBoxer/dev-chatgpt-prompts

- https://github.com/brexhq/prompt-engineering

- https://defra.github.io/defra-ai-sdlc/

sort of written by a human